I once worked on a program that collected hundreds of Gbytes of data during 4- and 8-hour flight-based measurements. So much data was collected that generating backups at the end of an experiment interfered with flight turn-around time.

Consider: a parallel 16-bit converter clocked at 2 MHz and running four hours straight generates 460e9 bits of data per ½-day of measurement. Per data channel.

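$$16\ \text{bits} \times 2\times10^{6}\ \text{samples/s} \times 4\ \text{h} \times 3600\ \text{s/h} \approx 460\times10^{9}\ \text{bits}$$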

or 53.6 Gbyte per channel (counting 2^30 bytes per Gbyte). At anywhere between 6 and 10 channels, each 4-hour flight sequence generates roughly 320 to 540 Gbyte of data.
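The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function and constant names are made up for illustration, and it assumes every conversion is stored as one 16-bit word and that "Gbyte" means 2^30 bytes, which is the convention that reproduces the 53.6 figure.

```python
BITS_PER_SAMPLE = 16          # parallel 16-bit converter
SAMPLE_RATE_HZ = 2_000_000    # 2 MHz conversion clock
FLIGHT_SECONDS = 4 * 3600     # one 4-hour flight sequence
GBYTE = 2**30                 # assumption: binary Gbyte, matches the 53.6 figure


def raw_bits_per_channel(bits=BITS_PER_SAMPLE, rate_hz=SAMPLE_RATE_HZ,
                         seconds=FLIGHT_SECONDS):
    """Total bits one channel produces when every conversion is stored."""
    return bits * rate_hz * seconds


def flight_gbytes(channels):
    """Storage for one flight sequence across `channels` channels, in Gbyte."""
    return channels * raw_bits_per_channel() / 8 / GBYTE


if __name__ == "__main__":
    print(f"bits per channel: {raw_bits_per_channel():.3e}")
    for n in (1, 6, 10):
        print(f"{n:2d} channel(s): {flight_gbytes(n):6.1f} Gbyte")
```

Two such flights in a day at 10 channels lands close to the 1 Tbyte per day mentioned further on.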

The short-term solution was to use portable hard drives. Although portable/external drives were not encouraged, they became a necessary means of data storage: a full drive could be swapped out rather than backed up, so data handling no longer interfered with flight operations.

That did not solve the problem of later processing and interpreting all those data sets.

Here’s all the data, so what does it mean?

14 flight days at perhaps 1 Tbyte data per day?

Too much data, not enough people, time, or resources to provide timely interpretation.

Why does all this data need to be collected … ?

Consider the purpose of this measurement: to obtain the average of some surface phenomenon from an airplane traveling at roughly 200 m/s. These are intended to be large scale surveys; think of plowing a field – each “rectangular” survey may consist of 16 lines of 180 km each. Assume the final desired spatial data resolution is to be 1 km; one survey line will then have 180 data points.

Given a velocity of 200 m/s and a required data set of 100 samples per kilometer, a data point needs to be recorded every 10 m, which is 20 samples per second, or one sample every 50 ms. 50 ms is a lo-o-ong time for electronic processing. And 10 m data resolution could be questioned as too tight for the final requirement of 1 km interpretation resolution.
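The step from ground speed and sample density to a sample rate is short enough to write out. The helper below is a hypothetical illustration (the name `sampling_plan` is not from any tool used on the program); it only restates the numbers in the paragraph above.

```python
def sampling_plan(speed_m_per_s, samples_per_km):
    """Return (spacing in m, sample rate in Hz, sample period in ms)."""
    spacing_m = 1000.0 / samples_per_km      # ground distance between samples
    rate_hz = speed_m_per_s / spacing_m      # samples needed per second
    period_ms = 1000.0 / rate_hz             # time available per sample
    return spacing_m, rate_hz, period_ms


if __name__ == "__main__":
    spacing, rate, period = sampling_plan(speed_m_per_s=200, samples_per_km=100)
    print(f"spacing {spacing} m, rate {rate} samples/s, period {period} ms")
```

Changing `samples_per_km` shows how quickly the time budget per sample shrinks or grows with the chosen spatial density.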

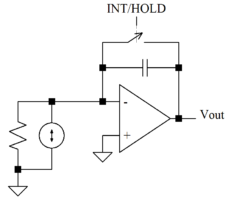

Consider the following ideal integrating network with photodiode source:

The signal current is proportional to the light intensity. The infinite input impedance of the opamp forces the source current into the capacitor, so the voltage across the capacitor increases as:

$$V_C(T) = \frac{1}{C}\int_0^{T} i_s(t)\,dt$$

where $i_s$ is the photodiode signal current, $C$ is the integrating capacitance, and $T$ is defined by the switch period.

With a clock of 50 ms pulse width (and high duty cycle) driving the switch, the integrated signal is a voltage applied to the ADC, which in turn provides a digital word representing the average light intensity over the 50 ms period.
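To see why one reading per window still carries the average, here is a rough numerical sketch of an ideal integrate-and-reset stage. Everything specific in it is an assumption for illustration: the 100 pF capacitor, the nanoamp-level photocurrents, and the 1 ms simulation step are invented values, and the function names are hypothetical. Sign inversion of a real opamp integrator is ignored; only the magnitude of the capacitor voltage is modeled.

```python
def integrate_and_dump(current_a, dt_s, window_s, cap_f):
    """Ideal resettable integrator.

    current_a: photocurrent samples in amperes, spaced dt_s seconds apart.
    Returns the capacitor voltage at the end of each full window, with the
    capacitor reset to zero at the start of every window.
    """
    steps = round(window_s / dt_s)
    voltages = []
    for start in range(0, len(current_a) - steps + 1, steps):
        charge_c = sum(current_a[start:start + steps]) * dt_s  # Q = integral of i dt
        voltages.append(charge_c / cap_f)                      # V = Q / C
    return voltages


def mean_current(voltage_v, window_s, cap_f):
    """Recover the average current over a window from the integrator output."""
    return voltage_v * cap_f / window_s


if __name__ == "__main__":
    DT = 1e-3        # assumed simulation step: 1 ms
    WINDOW = 50e-3   # 50 ms integration window from the text
    CAP = 100e-12    # assumed integrating capacitor: 100 pF

    steady = [1e-9] * 50              # constant 1 nA for one window
    flicker = [0.5e-9, 1.5e-9] * 25   # fluctuating, but still 1 nA on average
    for v in integrate_and_dump(steady + flicker, DT, WINDOW, CAP):
        print(f"V = {v:.3f} V, mean current = {mean_current(v, WINDOW, CAP):.2e} A")
```

Both windows end at the same voltage even though the second one fluctuates, which is the point: the analog stage does the averaging before the ADC ever sees the signal.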

Now collecting 20 samples per second (spatial sample point every 10 m) generates:

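$$20\ \text{samples/s} \times 16\ \text{bits} \times 4\ \text{h} \times 3600\ \text{s/h} = 4.608\times10^{6}\ \text{bits} = 576\,000\ \text{bytes}$$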

The 4-hour flight sequence, when pre-processed this way, generates 0.55 Mbyte per channel … a bit more than 1/3 of what an old 1.44 Mbyte 3.5″ floppy held … and the data is still suitable for additional processing if desired.

Perhaps this is insufficient data quantity for aficionados of DSP techniques; bump it by a factor of 100: 10,000 samples per km – every half ms, every 10 cm (a bit overkill for 1 km resolution, wouldn’t you think? – especially if the photon beam is 10 m wide at the surface). 100x more data – taking up 55 Mbyte per channel per flight sequence. A small flash drive? Or stick with the original hard drives and not need to worry about flight turn-around due to excessive back-up time.
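Putting the three cases side by side makes the difference plain. The sketch below assumes each stored value is still one 16-bit word, a 4-hour flight, and binary Mbytes (2^20 bytes), which is what reproduces the 0.55 and 55 Mbyte figures; the function name is hypothetical.

```python
BITS_PER_SAMPLE = 16        # assumed: one 16-bit word per stored value
FLIGHT_SECONDS = 4 * 3600   # one 4-hour flight sequence
MBYTE = 2**20               # assumption: binary Mbyte


def mbytes_per_flight(rate_hz):
    """Per-channel storage for one flight at the given stored sample rate."""
    return rate_hz * BITS_PER_SAMPLE * FLIGHT_SECONDS / 8 / MBYTE


if __name__ == "__main__":
    cases = [
        ("raw converter output, 2 MHz", 2_000_000),
        ("integrated, 20 samples/s", 20),
        ("integrated, 2000 samples/s", 2_000),
    ]
    for label, rate in cases:
        print(f"{label:30s} {mbytes_per_flight(rate):12.2f} Mbyte/channel")
```

Going from the raw 2 MHz stream to 20 stored samples per second is a reduction of 100,000 to 1; even the 100x "overkill" case is still 1,000 times smaller than the raw recording.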

The primary goal is to obtain useful information; demonstration of advanced DSP algorithms should be no more than secondary – if that.

That’s good for now.